Frequently Asked Questions about GitLab merge request handling

- I got an email about a failed pipeline, how do I find the failure?

- What is {realtime,automotive}_check and what should I do when it fails?

- How do I send built kernels to partners?

- A test failed and I don’t understand why!

- Why is my test/pipeline taking so long?

- How do I retry a job?

- How do I retry a pipeline?

- Steps for developers to follow to get a green check mark?

- How to customize test runs?

- I need to keep the artifacts for longer

- I need to regenerate the artifacts

- Where do I reach out about any problems?

I got an email about a failed pipeline, how do I find the failure?

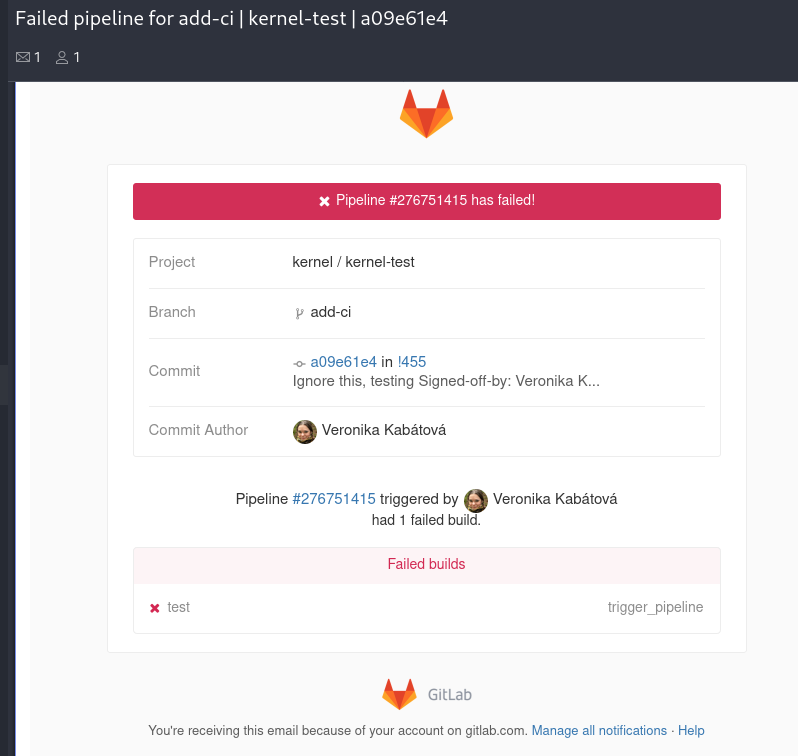

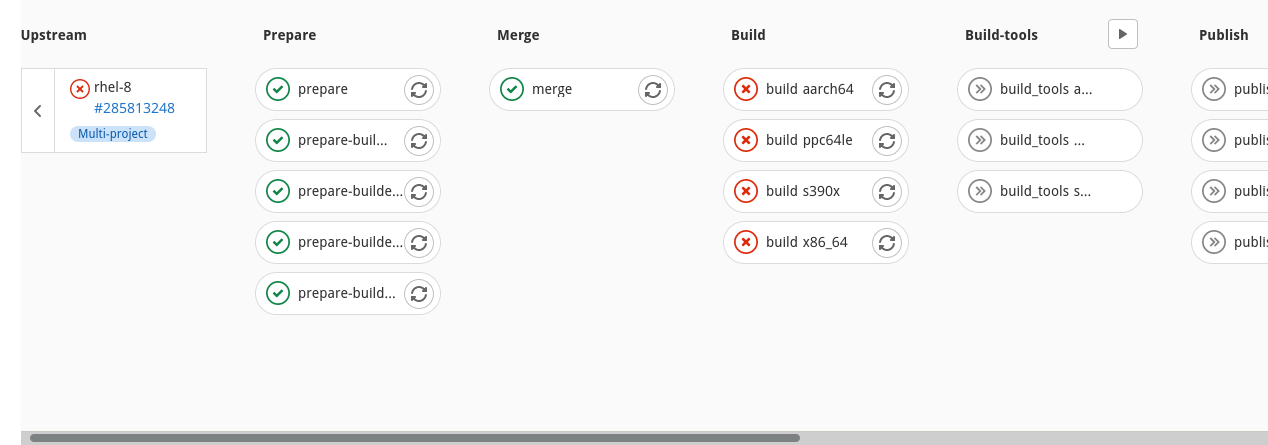

Click on the pipeline ID in the email (in the “Pipeline #276751415 triggered” part in the example). This will bring you to the generic pipeline view:

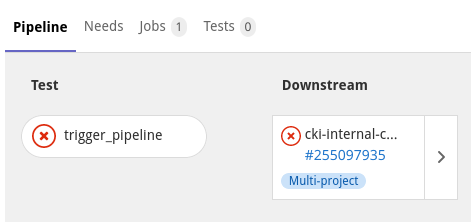

Click on the right arrow to unpack the pipeline:

Note that you may have to scroll to the side to see the full pipeline visualisation and find the failures!

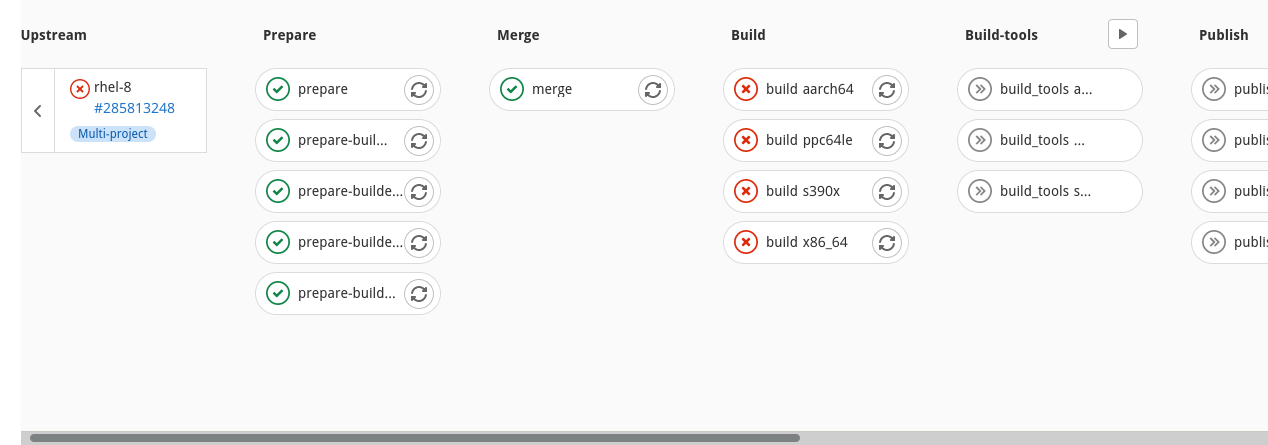

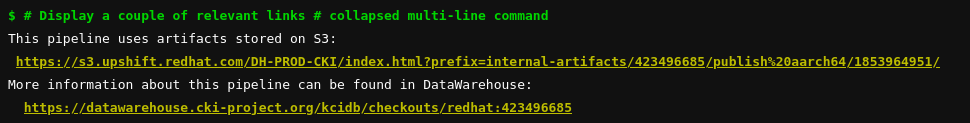

Any failed jobs will be marked with a red cross mark. Click on them and follow the output to find out what happened. If the job output is not sufficient, non-CVE pipelines also have complete logs available in DataWarehouse. A direct link is available at the end of the GitLab job output:

For more information, see the detailed debugging guide.
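
If you prefer to list the failures from a script rather than clicking through the pipeline view, the following minimal sketch may help. It assumes you have already fetched the job list of the pipeline as JSON, for example from the GitLab REST API endpoint `GET /projects/:id/pipelines/:pipeline_id/jobs`, whose job objects carry the `name`, `stage`, `status` and `web_url` fields. The sample data, job names and host name below are hypothetical.

```python
def failed_jobs(jobs):
    """Return (stage, name, web_url) for every job whose status is 'failed'."""
    return [
        (job["stage"], job["name"], job["web_url"])
        for job in jobs
        if job["status"] == "failed"
    ]


# Hypothetical sample data shaped like GitLab job objects (not real CKI output).
sample_jobs = [
    {"name": "build x86_64", "stage": "build", "status": "success",
     "web_url": "https://gitlab.example.com/jobs/1"},
    {"name": "test x86_64", "stage": "test", "status": "failed",
     "web_url": "https://gitlab.example.com/jobs/2"},
    {"name": "test aarch64", "stage": "test", "status": "skipped",
     "web_url": "https://gitlab.example.com/jobs/3"},
]

for stage, name, url in failed_jobs(sample_jobs):
    print(f"{stage}: {name} -> {url}")
```

Open the printed URLs to read the job output, the same way you would after clicking a red cross mark in the pipeline view.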

What is {realtime,automotive}_check and what should I do when it fails?

These checks are in place to give the real time and automotive kernel teams a heads up about conflicts with their kernel branches. No action from the developers is required at this stage and failures are not blocking. The real time and automotive teams will contact you if they need to follow up.

How do I send built kernels to partners?

Open the DataWarehouse entry for your pipeline. A direct link is available in any GitLab job output at the bottom:

From there, open the entry of the build you’re interested in, based on the architecture and debug options. A link to a yum/dnf repofile, a direct URL consumable by yum/dnf, and a browsable kernel repository are all available in the “Output files” table. You can use any of these to retrieve the desired packages (pick whichever option you’re most comfortable with).

Once you have retrieved the packages you can forward these builds to partners using the same process you used to forward builds before.

NOTE: CVE run data is not saved in DataWarehouse. If you absolutely need to send CVE builds to partners, you can retrieve all links described above from the publish jobs in the GitLab pipeline. Same as with DataWarehouse, pick the needed publish job based on the architecture and debug options. Be very careful when sharing embargoed content!

A test failed and I don’t understand why!

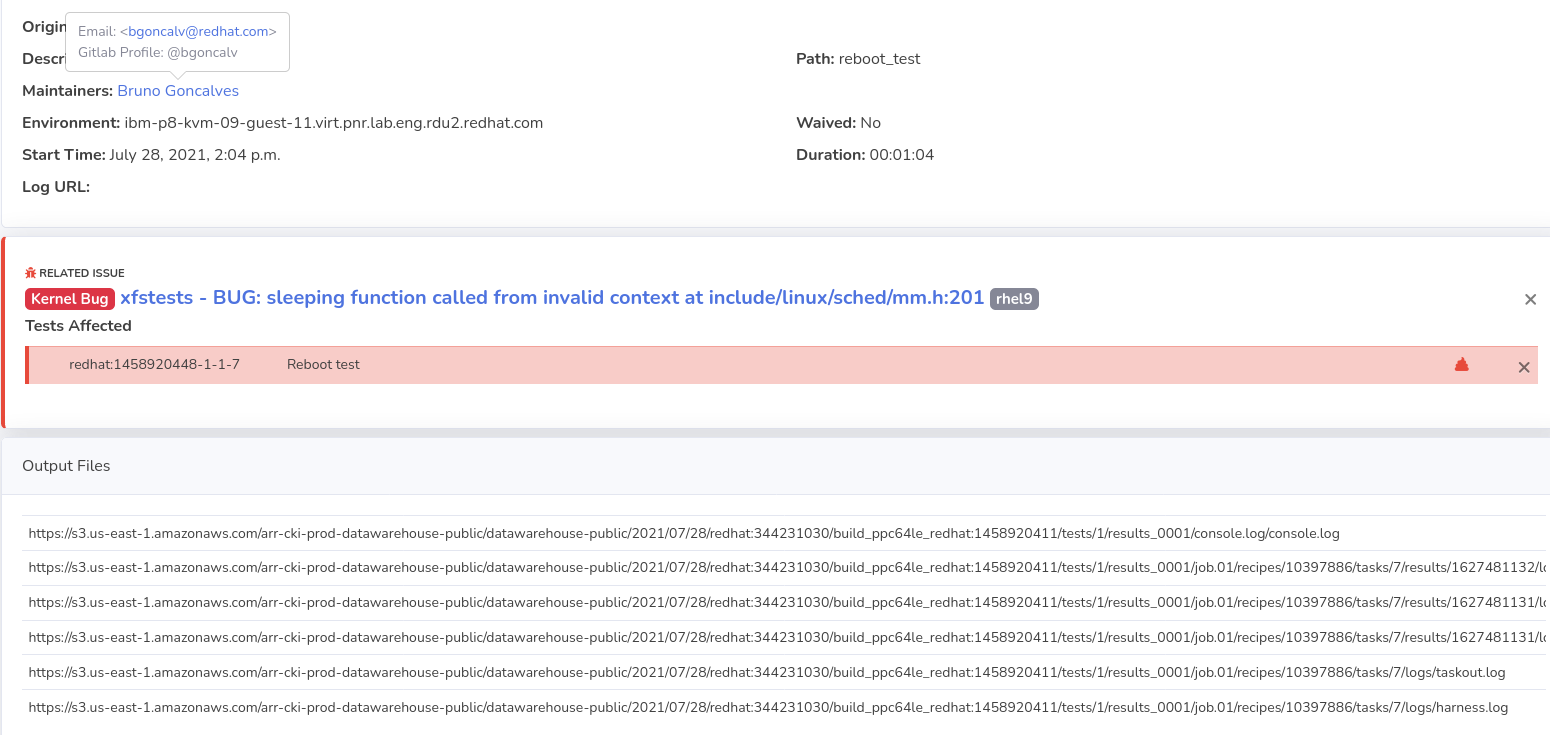

If result checking is enabled (last job of the pipeline is check-kernel-results), follow the DataWarehouse link in the job console output. You can find all test logs available there, as well as contact information of the test maintainers:

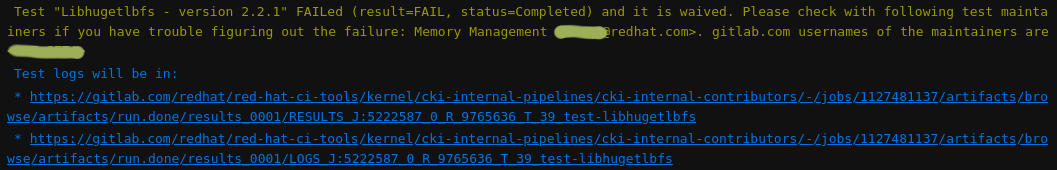

If result checking is not enabled (e.g. during the CVE process), links to test logs and maintainer information will be printed in the test job output:

You can contact the test maintainers if you can’t figure out the failure reason from the logs yourself.

Why is my test/pipeline taking so long?

CKI runs tests across a large pool of dedicated systems. While systems are constantly added to this pool there are occasionally times where the number of jobs/pipelines may exceed the pool’s queue capacity (for example, at the end of a release cycle). In addition to this, CKI updates and infrastructure failures may also impact job/pipeline completion times. As a result of these issues, you may occasionally experience delays in job/pipeline completion.

If your jobs/pipelines are waiting for resources, please be patient while they work their way through the queue. If you are concerned that your job or pipeline is no longer responding, you can contact the CKI team for help.

How do I retry a job?

Jobs can be retried from both the pipeline overview and specific job views. In the pipeline overview, you can click on the circled double arrows to retry specific jobs:

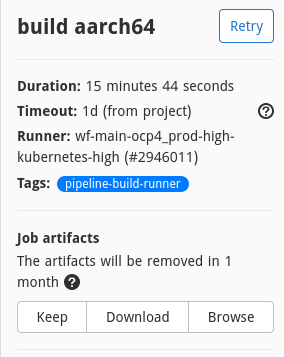

If you already have a specific job open, click on the Retry button on the right side:

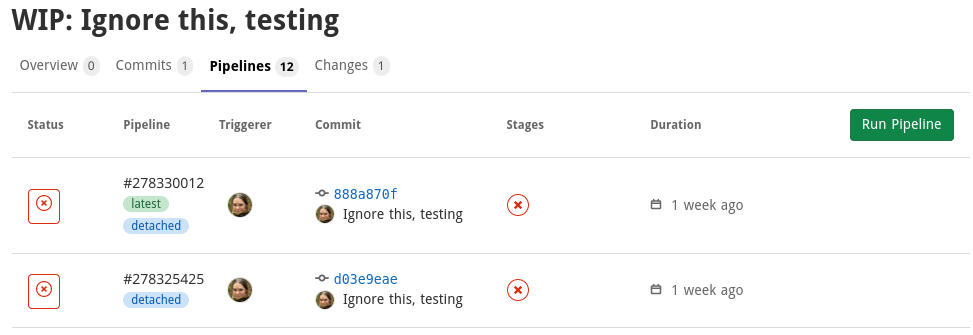
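
The same retry can also be requested through the GitLab REST API, which offers `POST /projects/:id/jobs/:job_id/retry`. The sketch below only builds the request and does not send it. The host name, project ID, job ID and token are placeholders, and whether your token is permitted to retry jobs in the pipeline project is an assumption you need to verify.

```python
import urllib.request


def build_job_retry_request(api_base, project_id, job_id, token):
    """Build (but do not send) the POST request that retries a single job."""
    url = f"{api_base}/projects/{project_id}/jobs/{job_id}/retry"
    return urllib.request.Request(
        url,
        method="POST",
        headers={"PRIVATE-TOKEN": token},  # personal access token header
    )


# Placeholder values: replace them with your GitLab host, project and job IDs.
request = build_job_retry_request(
    "https://gitlab.example.com/api/v4", 1234, 5678, "<your-token>"
)
print(request.get_method(), request.full_url)

# To actually send it: urllib.request.urlopen(request)
```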

How do I retry a pipeline?

Go into the Pipelines tab on your merge request and click on the Run pipeline button:

Steps for developers to follow to get a green check mark?

- Look into the failure as outlined in the first question above.

- If the failure is caused by your changes, push a fixed version of the code. A new CI run will be automatically created.

- If the failure reason looks to be unrelated to your changes, the failure needs to be waived:

  - If you are not working on a CVE, ask the test maintainer to review the failure. The test maintainer should add it to a new known issue in DataWarehouse. Afterwards, restart the kernel-result stage at the end of the pipeline to force result reevaluation. There is no need to rerun the testing or even the complete pipeline!

  - If you are working on a CVE, explain the situation in a comment on your merge request. You can still look into DataWarehouse for known issues yourself; however, the automated detection is disabled due to security concerns.

  - More details on how to find the test logs or the test maintainers’ contact information are available in the “A test failed and I don’t understand why” section.

How to customize test runs?

Modify the configuration in .gitlab-ci.yml in the top directory of the kernel repository. The list of all supported configuration options (“pipeline variables”) is available in the configuration documentation.

Don’t forget to revert your customizations when marking the MR as ready!

I need to keep the artifacts for longer

If you know the default 6 months is not enough for your case, you can proactively prolong the lifetime of the artifacts. Before the artifacts disappear, retry the publish jobs in the pipeline. This will keep the build artifacts for another 6 months, counted from the retry. This can only be done once, because the publish jobs depend on the previous pipeline jobs, and those have the same lifetime.

See the section below if the artifacts already disappeared and you need to regenerate them.

I need to regenerate the artifacts

There are two ways to regenerate the artifacts:

- Submit a new pipeline run, either by pushing into your branch or by clicking the Run pipeline button on your MR. This method will execute the full pipeline, which means testing will run again and the MR will be blocked until it’s finished.

- Sequentially retry all pipeline jobs up to (and including) the publish stage. This can be done on a per-stage basis, i.e. retrying all prepare or build jobs at once. Stages are visually defined as columns in the pipeline view.
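
To plan the second option, it can help to group the jobs by stage and stop at publish. The following is a minimal sketch of that selection. The stage order and job names are assumptions made up for illustration; take the real ones from the columns in your pipeline view.

```python
def jobs_to_retry(jobs, stage_order, last_stage="publish"):
    """Group job names by stage, for every stage up to and including last_stage."""
    wanted = stage_order[: stage_order.index(last_stage) + 1]
    return [
        (stage, [job["name"] for job in jobs if job["stage"] == stage])
        for stage in wanted
    ]


# Hypothetical stage order and jobs; read the real ones off your pipeline view.
stage_order = ["prepare", "build", "publish", "test"]
pipeline_jobs = [
    {"name": "prepare builder", "stage": "prepare"},
    {"name": "build x86_64", "stage": "build"},
    {"name": "build aarch64", "stage": "build"},
    {"name": "publish x86_64", "stage": "publish"},
    {"name": "test x86_64", "stage": "test"},
]

# Retry one stage at a time, waiting for it to finish before starting the next.
for stage, names in jobs_to_retry(pipeline_jobs, stage_order):
    print(f"{stage}: {', '.join(names)}")
```

Jobs in later stages, such as the test job in this sample, are left out on purpose, so the testing is not run again.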