Reproducing and debugging kernel builds

CKI uses container images to allow building kernels for different OS releases independently of the local environment. Before starting, install podman so you can pull and run the container images:

dnf install -y podman

If your distribution lacks podman, you may be able to use docker instead. The interfaces of the two tools are almost identical, and you should be able to get going by replacing podman with docker in the example commands. Note that using docker is not officially supported.
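If you work on machines where only one of the two tools is installed, a small shell function can choose between them. This is a minimal sketch; `container_engine` is a hypothetical helper, not part of CKI.

```shell
# Hypothetical helper: print the container engine to use.
# Prefers podman; falls back to docker, which CKI does not officially support.
container_engine() {
    if command -v podman >/dev/null 2>&1; then
        echo podman
    elif command -v docker >/dev/null 2>&1; then
        echo docker
    else
        echo "error: neither podman nor docker is installed" >&2
        return 1
    fi
}
```

For example, `ENGINE=$(container_engine) && "$ENGINE" image pull <IMAGE_NAME_AND_TAG>` pulls the image with whichever tool was found.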

Finding the correct job

There are multiple places in the pipeline where builds run. Most likely, you want to reproduce a failed build, which is marked with a red cross. If you need to reproduce a successful build, first find the correct job in the pipeline:

- Base kernel build: Open the desired architecture in the build stage.
- Kernel tools builds for non-x86_64 architectures: Open the desired architecture in the build-tools stage.

Download the builder container image locally

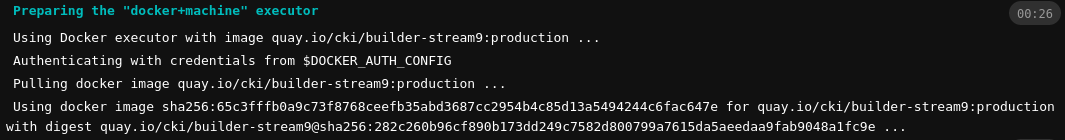

Information about which container image and tag was used to build the kernel is provided in the job we found in the previous step, at the top of the logs:

Use podman to download the container image from the last line:

podman image pull <IMAGE_NAME_AND_TAG>

For example, the command for the screenshot example would be:

podman image pull quay.io/cki/builder-stream9@sha256:282c260b96cf890b173dd249c7582d800799a7615da5aeedaa9fab9048a1fc9e
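When reproducing several builds that use the same builder image, you may want to skip the download if the image is already stored locally. A minimal sketch, assuming podman; `ensure_builder_image` is a hypothetical helper name. It relies on `podman image exists`, which exits with status 0 when the image is present in local storage.

```shell
# Hypothetical helper: pull the builder image only if it is not stored locally.
# Usage: ensure_builder_image <IMAGE_NAME_AND_TAG>
ensure_builder_image() {
    image="$1"
    if [ -z "$image" ]; then
        echo "usage: ensure_builder_image <IMAGE_NAME_AND_TAG>" >&2
        return 2
    fi
    # 'podman image exists' exits 0 if the image is already present
    if podman image exists "$image"; then
        echo "already present: $image"
    else
        podman image pull "$image"
    fi
}
```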

Accessing private container images

The “production” and “latest” tags for all RHEL container images are accessible on the Red Hat VPN.

Details can be found on the internal companion page.

Reproducing the build

Start the container

podman run -it --rm <IMAGE_NAME_AND_TAG> /bin/bash

The --rm option makes podman delete the container automatically once you exit it.

Get the kernel rebuild artifacts

If you are rebuilding a tarball or source RPM, you need to clone or copy the git repository into the container. If you already have the tree cloned locally, you can mount it into the container directly by starting the container from the root of the tree with this adjusted command line:

podman run -it --rm -v "$PWD:$PWD:z" -w "$PWD" <IMAGE_NAME_AND_TAG> /bin/bash

This mounts your current local directory into the container at the same path it has on the host and uses it as the working directory.
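The same command can be wrapped in a small function so the option order is never wrong. This is a sketch with a hypothetical helper name (`start_builder`), assuming you call it from the root of your local kernel tree. Podman treats everything after the image name as the command to run inside the container, so `-v` and `-w` must come before it. The `:z` suffix relabels the mounted directory so that SELinux allows the container to access it.

```shell
# Hypothetical helper: start a throwaway interactive builder container with
# the current directory mounted at the same path and used as working directory.
# Usage: start_builder <IMAGE_NAME_AND_TAG>
start_builder() {
    image="$1"
    if [ -z "$image" ]; then
        echo "usage: start_builder <IMAGE_NAME_AND_TAG>" >&2
        return 2
    fi
    # All podman options go before the image; /bin/bash is the container command
    podman run -it --rm -v "$PWD:$PWD:z" -w "$PWD" "$image" /bin/bash
}
```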

If you are reproducing the RPM build or the tools build, you need to retrieve the built source RPM. It is available in the output files of the build in DataWarehouse.
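To download the artifacts inside the container, a loop around curl is enough. A minimal sketch; `fetch_artifacts` is a hypothetical helper, and the URLs are whatever DataWarehouse lists in the build's output files (not shown here).

```shell
# Hypothetical helper: download one or more artifact URLs into the current
# directory, stopping at the first failure.
#   -f  fail on HTTP errors instead of saving an error page
#   -L  follow redirects
#   -O  save each file under its remote name
# Usage: fetch_artifacts <URL> [<URL>...]
fetch_artifacts() {
    if [ "$#" -eq 0 ]; then
        echo "usage: fetch_artifacts <URL> [<URL>...]" >&2
        return 2
    fi
    for url in "$@"; do
        curl -fLO "$url" || return 1
    done
}
```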

You can either run the download commands (e.g. curl) in the container directly or, if you already have the artifacts locally, use podman to copy them over. Run the following command from outside the container:

podman cp <LOCAL_FILE_PATH> <CONTAINER_ID>:<RESULT_FILE_PATH>

You can retrieve the <CONTAINER_ID> from the output of:

podman container list
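When the builder is the only running container, the ID lookup and the copy can be combined. This sketch assumes exactly one running container and uses a hypothetical helper name, `copy_into_builder`; with several containers running, it stops and leaves the choice to you.

```shell
# Hypothetical helper: copy a local file into the single running container.
# Usage: copy_into_builder <LOCAL_FILE_PATH> <RESULT_FILE_PATH>
copy_into_builder() {
    src="$1"
    dest="$2"
    # -q prints only the IDs of running containers
    ids=$(podman container list -q) || return 1
    # Word-split the IDs to count them (container IDs contain no whitespace)
    set -- $ids
    if [ "$#" -ne 1 ]; then
        echo "found $# running containers; pick one from 'podman container list' and run podman cp yourself" >&2
        return 1
    fi
    podman cp "$src" "$1:$dest"
}
```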

NOTE: podman cp can also be used to copy artifacts out of a running container, similar to how the regular cp command works.
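Copying results out works the same way with the arguments reversed. The default container path below is an assumption: rpmbuild writes binary RPMs to ~/rpmbuild/RPMS unless the command overrides _topdir, which is /root/rpmbuild/RPMS if the container runs as root. Check the rpmbuild command from the job log and pass a different path if needed. `copy_results_out` is a hypothetical helper.

```shell
# Hypothetical helper: copy build results out of a running container into
# the current host directory.
# The default path assumes rpmbuild's default _topdir and a root user.
# Usage: copy_results_out <CONTAINER_ID> [CONTAINER_PATH]
copy_results_out() {
    cid="$1"
    path="${2:-/root/rpmbuild/RPMS}"
    if [ -z "$cid" ]; then
        echo "usage: copy_results_out <CONTAINER_ID> [CONTAINER_PATH]" >&2
        return 2
    fi
    podman cp "$cid:$path" .
}
```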

You can also rebuild the source RPM from git first and then reproduce the actual build, if you wish.

Get the kernel configuration

For RPM-built kernels, the default configuration for a given architecture is used. The configuration is part of the built source RPM so no steps are needed here.

For kernels built as tarballs, the config file used to build the kernel is available in DataWarehouse. Retrieve the config file the same way as the kernel tarball or source RPM in the previous step, and save it as .config in the root of the kernel repository.
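Fetching the config can be scripted as well. The sketch below assumes you run it from the root of the kernel repository and uses the presence of the top-level Makefile and Kconfig files as a rough check for that; `fetch_config` is a hypothetical helper and <CONFIG_URL> stands for the URL listed in DataWarehouse.

```shell
# Hypothetical helper: download the build's config and save it as .config.
# Usage: fetch_config <CONFIG_URL>
fetch_config() {
    url="$1"
    if [ -z "$url" ]; then
        echo "usage: fetch_config <CONFIG_URL>" >&2
        return 2
    fi
    # Rough heuristic that we are at the top of a kernel tree
    if [ ! -f Makefile ] || [ ! -f Kconfig ]; then
        echo "run this from the root of the kernel repository" >&2
        return 1
    fi
    # -f: fail on HTTP errors, -L: follow redirects, -o: output file name
    curl -fL -o .config "$url"
}
```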

Export required variables

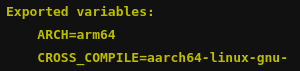

For build and build-tools jobs, some environment variables are needed to

properly reproduce the build. These are printed in the job output:

Export the same variables in the container. If the CROSS_COMPILE variable is not exported, the kernel was built natively. In that case, you need to run the container on the same architecture to properly reproduce the build.
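The architecture rule above can be expressed as a small check to run inside the container after exporting the variables. A sketch under two assumptions: the job architecture is passed in the form printed by `uname -m` (for example x86_64 or aarch64), and `check_build_host` is a hypothetical helper name.

```shell
# Hypothetical helper: decide whether this host can reproduce the build.
# A non-empty CROSS_COMPILE means the job cross-compiled, so any host works;
# otherwise the job built natively and the host architecture must match.
# Usage: check_build_host <JOB_ARCH>
check_build_host() {
    job_arch="$1"
    if [ -n "${CROSS_COMPILE:-}" ]; then
        echo "cross-compiling with CROSS_COMPILE=$CROSS_COMPILE"
        return 0
    fi
    host_arch=$(uname -m)
    if [ "$host_arch" = "$job_arch" ]; then
        echo "native build on $host_arch"
    else
        echo "native build expected on $job_arch, but this host is $host_arch" >&2
        return 1
    fi
}
```

For example, after exporting the variables from the job output, `check_build_host aarch64` fails on an x86_64 host unless CROSS_COMPILE is set.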

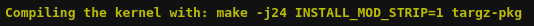

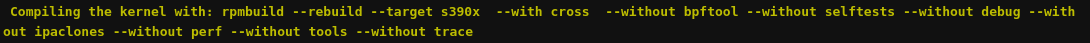

Run the actual build command

The command used to build the kernel is printed in the job logs and also

available on the build details in DataWarehouse. Some examples of the tarball

build and rpmbuild commands in the GitLab job output:

Copy and run the command. For the rpmbuild commands, you have to append the

source RPM path to the end.
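Appending the source RPM can be wrapped in a function that also checks that the file exists. A minimal sketch; `rebuild_srpm` is a hypothetical helper, and the words you pass after the SRPM path are the rpmbuild command copied verbatim from the job log.

```shell
# Hypothetical helper: run the rpmbuild command from the job log with the
# source RPM appended as the last argument.
# Usage: rebuild_srpm <SRPM_PATH> <RPMBUILD_COMMAND_FROM_LOG...>
rebuild_srpm() {
    srpm="$1"
    shift
    if [ ! -f "$srpm" ]; then
        echo "source RPM not found: $srpm" >&2
        return 1
    fi
    if [ "$#" -eq 0 ]; then
        echo "usage: rebuild_srpm <SRPM_PATH> rpmbuild ..." >&2
        return 2
    fi
    # Run the copied command unchanged, with the SRPM as the final argument
    "$@" "$srpm"
}
```

For example: `rebuild_srpm <SRPM_PATH> <RPMBUILD_COMMAND_FROM_LOG>`.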

Local reproducer does not work

If you cannot reproduce the build locally and need direct access to the pipeline run to debug what is going on, reach out to the CKI team. The process to get access is described in the “Debugging a pipeline job” documentation.