Full picture of CKI merge request testing

Currently, kernel merge request testing is only set up on GitLab.com, and as such this guide will reference it directly. Administrative setup can be found under the documentation for pipeline triggering; this documentation is aimed at kernel developers.

Contacting the CKI team

Throughout this guide, contacting the CKI team is mentioned several times.

You can do so by tagging the @cki-project group on gitlab.com or by sending an email to cki-project@redhat.com. Red Hat associates can also ping us in the internal Slack channel: #team-kernel-cki.

Please avoid contacting individual team members, as threads are often resolved faster as a group!

Trusted contributors

If you are a member of a GitLab project with CKI CI enabled (e.g. kernel-ark or centos-stream-9), you are considered a trusted contributor. As a trusted contributor, you have permission to trigger testing pipelines in specific CKI pipeline projects. The created pipelines will be accessible from the merge request.

Trusted contributors may override the pipeline configuration by changing the variables section of the .gitlab-ci.yml file. This can be done as a one-off run that you revert from your MR, or as part of your changes (e.g. when kernel-ark switched its SRPM target from make rh-srpm to make dist-srpm).

Check out the configuration option documentation for the full list of available options. More details about this functionality are available in the customized CI runs documentation below.

External contributors

If you are not a member of a CKI-enabled project, but submit merge requests to one, you are considered an external contributor. In this case, a bot will leave a comment explaining which project membership is required to be considered a trusted contributor.

Limited testing pipeline

The bot will trigger a limited testing pipeline with a predefined configuration and link it in another comment. The comment with the pipeline status will be updated as the pipeline runs. The bot will create a new pipeline after any changes to your merge request. Changes to the .gitlab-ci.yml file are not reflected in the pipelines created by the bot due to security concerns.

Configuration options used for external contributors’ pipelines are stored in the pipeline-data repository and any changes to them need to be ACKed by either kernel maintainers or the CKI team.

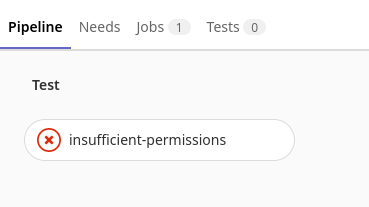

For external contributors, the full testing pipelines will fail with permission issues:

Reviewers can trigger a full testing pipeline by going into the “Pipelines” tab on the MR and clicking the “Run pipeline” button.

This will include any changes to the .gitlab-ci.yml file from the merge request.

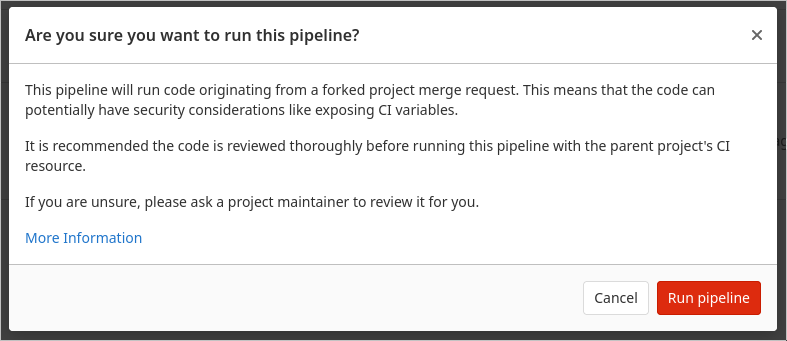

In the background, this uses GitLab’s feature for running pipelines for MRs from forks in the parent project. Because of that, the following warning needs to be acknowledged:

A successful full pipeline is required in order to merge the MR.

What does a high level CI flow look like?

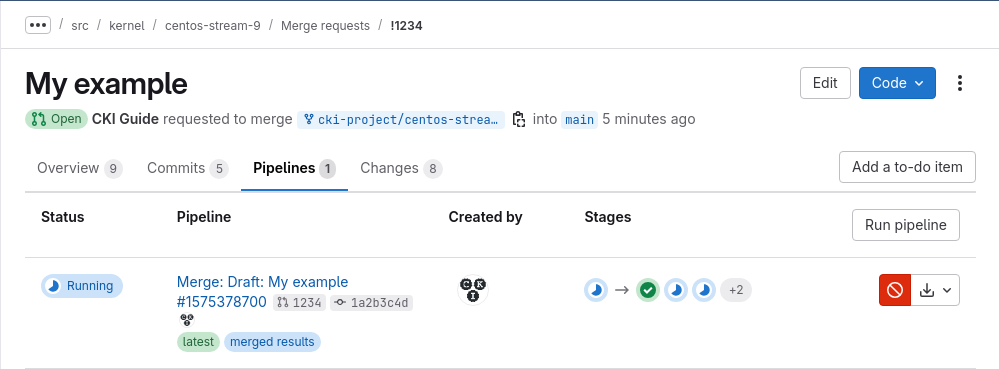

If you want any kernels built or tested, you submit a merge request. This is used as an explicit “I want CI” request as opposed to testing any pushes to git branches, to conserve resources when no testing is required. Merge requests marked as “Draft:” are also tested. These are useful if your changes are not yet ready or are a throwaway (e.g. building a kernel with a proposed fix to help support engineers). Once you submit a merge request, a CI pipeline will automatically start.

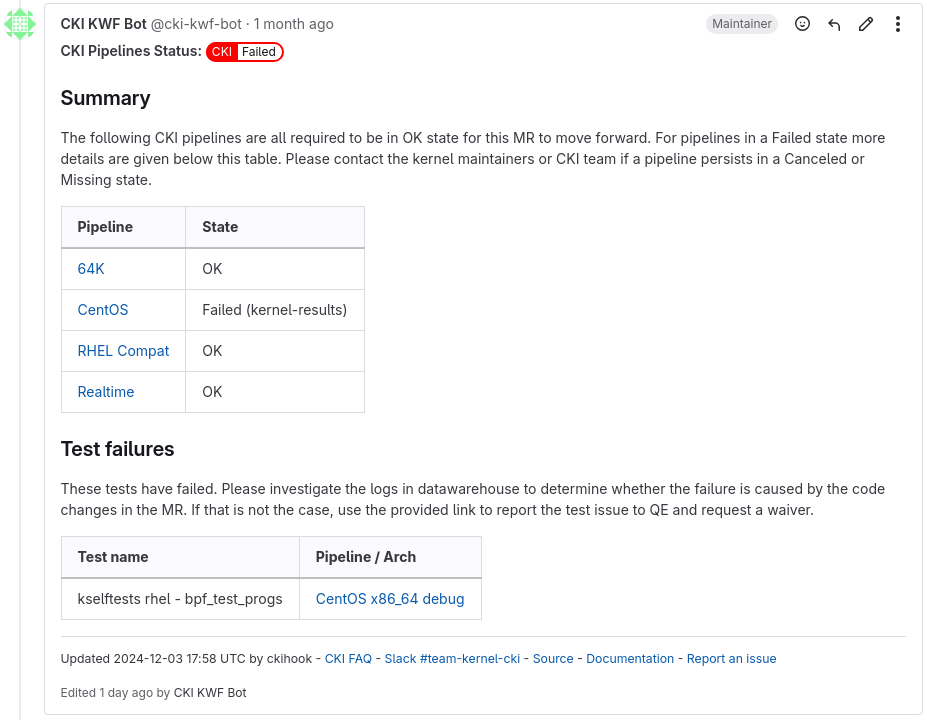

You can follow its progress through the comment that @cki-kwf-bot leaves in your MR, headed “CKI Pipelines Status”. The comment is updated automatically whenever the pipeline status changes, and once the pipeline is done, it contains hyperlinks to all relevant records in DataWarehouse.

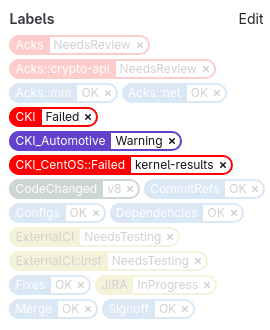

You can see that right after “CKI Pipelines Status” at the top there’s a red badge saying CKI::Failed. That’s one of the labels the KWF bot tags your MR with. With regard to CKI:

- A blue CKI::OK is what you want to see.

- Any purple CKI*::Warning can mostly be ignored, as it is only relevant to the respective team.

- Any CKI*::Failed::* label is the one to dread: together with the comment mentioned above, it summarizes the failed outcome for one of the evaluated kernel variants.
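To make the label scheme concrete, here is a minimal Python sketch that sorts an MR’s labels into the three groups described above. The glob patterns follow the label names used in this guide; the helper names summarize_cki_labels and needs_action, and labels such as CKI_RT::Warning, are hypothetical. The real label set is whatever the KWF bot applies.

```python
from fnmatch import fnmatchcase


def summarize_cki_labels(labels):
    """Group CKI-related MR labels by how much attention they need.

    The glob patterns mirror the label names used in this guide
    (CKI::OK, CKI*::Warning, CKI*::Failed::*); they are not an official list.
    Labels that match none of the patterns (e.g. non-CKI labels) are skipped.
    """
    summary = {"ok": [], "warning": [], "failed": []}
    for label in labels:
        if fnmatchcase(label, "CKI*::OK"):
            summary["ok"].append(label)
        elif fnmatchcase(label, "CKI*::Failed*"):
            summary["failed"].append(label)
        elif fnmatchcase(label, "CKI*::Warning*"):
            summary["warning"].append(label)
    return summary


def needs_action(labels):
    """Only failure labels need your attention; warnings are for other teams."""
    return bool(summarize_cki_labels(labels)["failed"])


# Hypothetical label set for illustration.
print(summarize_cki_labels(["CKI::OK", "CKI_RT::Warning", "Acks::OK"]))
```

Because fnmatch’s * also matches colons, a single pattern covers both the plain CKI::Failed badge and more specific CKI*::Failed::* labels.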

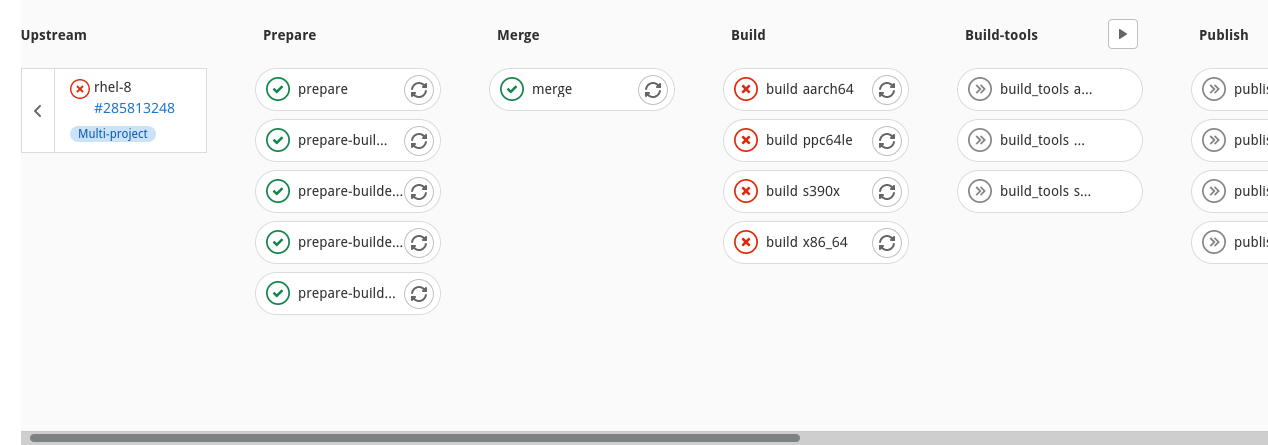

Finally, you can also look directly at the pipeline to follow its progress. The MR CI process uses multi-project pipelines, so you’ll see a very condensed view. Each MR pipeline triggers multiple downstream pipelines, each of which evaluates a kernel variant on a set of different architectures. To view one of the downstream pipelines in full, click on the arrow on the right side of one of the boxes labeled “Multi-project”.

Note: If you are working on a CVE, results are not pushed to DataWarehouse, so detailed output and links to log files will only be available in the pipeline, more specifically, in its jobs.

A full CKI run can look different depending on which repository you are contributing to. For example, kernel-ark may try to schedule 64 builds without testing in 20 downstream pipelines, while centos-stream-9 may try to schedule 24 builds with testing in 4 downstream pipelines. In some cases, there might be additional downstream pipelines that won’t be included in the summary by @cki-kwf-bot; more on that later.

You may have to scroll to the side to see the full pipeline visualization!

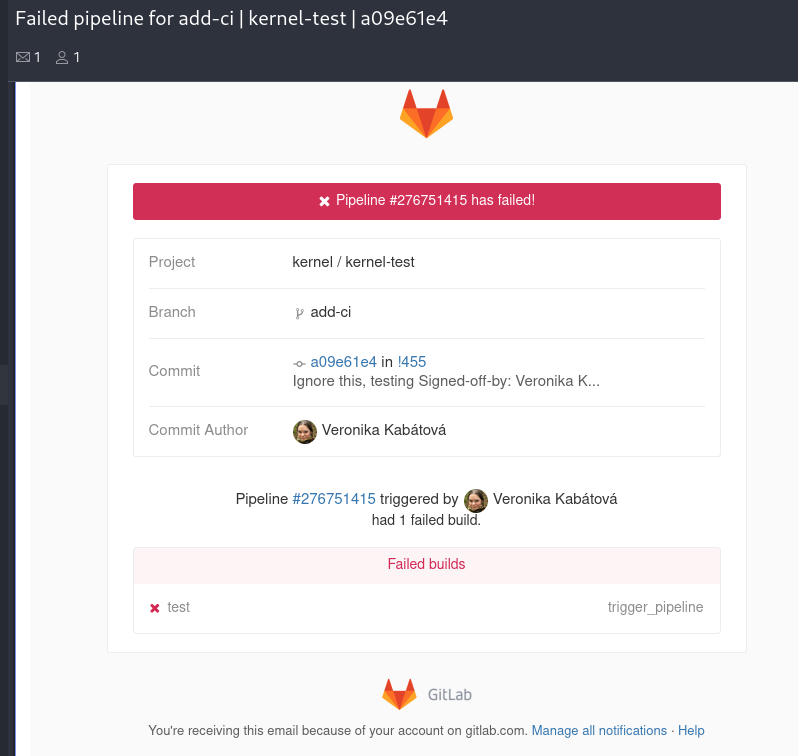

If the pipeline fails, you’ll automatically get an email from GitLab (unless you changed your notification settings; in that case, you have to check the pipeline status manually). This is what a pipeline failure email from GitLab looks like:

Notice the clickable pipeline ID in the email (“Pipeline #276751415 triggered” in the example screenshot). Clicking on it brings you to the condensed multi-project pipeline view, as shown in the second screenshot in this section. You also get a link pointing to your merge request ("!455" in the example). You can reach the pipeline from your merge request as well, but there’s no need to since the direct link is available.

The CKI team has implemented automatic retries to handle intermittent infrastructure failures and outages. Such failed jobs are automatically retried using exponential backoff, so no action is required from you on infrastructure failures. In general, you can rely exclusively on the bot’s comment and follow its DataWarehouse hyperlinks to find out what happened and fix up the merge request if needed.
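CKI’s actual retry parameters are not documented in this guide. Purely to illustrate the exponential backoff idea, here is a minimal Python sketch; the InfrastructureError class, the function name retry_with_backoff and all default delays are assumptions, not CKI’s implementation.

```python
import random
import time


class InfrastructureError(Exception):
    """Hypothetical stand-in for a transient failure, e.g. a registry outage."""


def retry_with_backoff(job, max_attempts=5, base_delay=1.0, max_delay=60.0,
                       sleep=time.sleep):
    """Run job() and retry it on InfrastructureError with exponential backoff.

    The nominal delay doubles after each failed attempt (1s, 2s, 4s, ...),
    capped at max_delay, and is scaled by random jitter so many retried jobs
    don't all hit the infrastructure at once. Any other exception is treated
    as a real failure and propagates immediately without a retry.
    """
    for attempt in range(max_attempts):
        try:
            return job()
        except InfrastructureError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the infrastructure failure
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay * random.uniform(0.5, 1.0))
```

The sleep parameter is injectable so the sketch can be exercised without actually waiting. Separating transient errors from everything else mirrors the guide’s point: retries only help with infrastructure issues, never with real kernel or test failures.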

Testing of merge results

The CI pipelines test merge results, i.e. the result of merging the merge request into the target branch.

For each push, GitLab performs a shadow merge with the target branch in the background. A notification in the merge request GUI alerts you if that resulted in any conflicts. The pipeline will still start if a merge conflict occurs, but the merge stage will fail with an appropriate error message in the logs. In that case, rebase your merge request to resolve the conflicts.

The shadow merge will not be updated if the target branch changes. If you require a new run with newly merged changes, start a new pipeline or rebase your MR.

Non-blocking pipelines

When applicable, there will be extra pipelines named realtime_check or automotive_check available for the merge request as well. The purpose of these pipelines is to check whether your merge request is compatible with the real-time and automotive kernels. Failures in these pipelines are not blocking. They are only an indicator for the real-time and automotive kernel maintainers that either their branch is out of sync with the regular kernel, or that they will run into problems when merging your changes into their trees. You can ignore the realtime_check and automotive_check pipelines; the real-time or automotive kernel team will contact you if they need to follow up.

If the regular pipeline passes but the automotive kernel check fails (as shown in the example screenshot above), you will see a different marking in the GitLab web UI of your merge request:

As mentioned before, @cki-kwf-bot does not report the outcome of these non-blocking pipelines in its summary comment.
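The rule above can be summarized in a few lines of Python. This is a minimal sketch assuming a simple mapping from downstream pipeline name to pass/fail; only realtime_check and automotive_check come from this guide, while the other pipeline names and the helper functions are hypothetical.

```python
# Pipelines that only inform the real-time and automotive kernel maintainers.
NON_BLOCKING = frozenset({"realtime_check", "automotive_check"})


def mr_ci_passed(pipeline_results):
    """Return True if no blocking downstream pipeline failed.

    pipeline_results maps a pipeline name to True (passed) or False (failed).
    Failures in NON_BLOCKING pipelines are ignored.
    """
    return all(passed for name, passed in pipeline_results.items()
               if name not in NON_BLOCKING)


def informational_failures(pipeline_results):
    """List failed non-blocking pipelines, e.g. to show them separately."""
    return sorted(name for name, passed in pipeline_results.items()
                  if name in NON_BLOCKING and not passed)


# Hypothetical results: regular pipelines pass, the automotive check fails.
results = {"c9s": True, "c9s_debug": True, "automotive_check": False}
print(mr_ci_passed(results), informational_failures(results))
```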

Testing outside of CI pipeline

Not all kernel tests are onboarded into CKI and thus they can’t run as part of the merge request pipeline. Please consider automating and onboarding as many kernel tests as possible to increase the test coverage and avoid having to manually trigger the tests every time they are needed.

For testing outside of CI pipelines, developers, QE and users are encouraged to reuse kernels built by CKI. Kernel tarballs or RPM repository links (usable by dnf and yum) are provided in DataWarehouse and in the output of the publish jobs. A browsable repository URL is also provided.

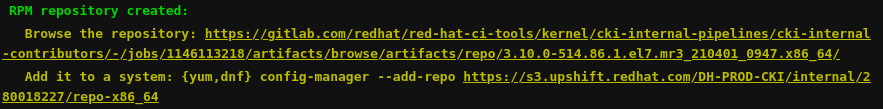

Example of URLs linked in the job output:

The first URL links to a browsable artifact page. This can use the GitLab artifacts web interface, or an external S3 storage. The second URL links to a yum/dnf repo file. Both links are also provided to QE in the Bugzilla/JIRA associated with the MR.
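The repo file can be used by dnf as-is, for example by saving it under /etc/yum.repos.d/. If you want to script around it instead, e.g. to feed the repository URL into your own test harness, the Python sketch below reads it with the standard library, since .repo files are INI-style. The repo ID and URL in EXAMPLE_REPO are made up; the real content comes from the publish job output.

```python
import configparser


def repo_baseurls(repo_text):
    """Return {repo_id: baseurl} for each section of a dnf/yum .repo file.

    Interpolation is disabled because URLs may contain '%' escapes,
    which configparser would otherwise try to expand.
    """
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(repo_text)
    return {
        section: parser.get(section, "baseurl")
        for section in parser.sections()
        if parser.has_option(section, "baseurl")
    }


# Hypothetical repo file contents for illustration only.
EXAMPLE_REPO = """\
[cki-example-kernel]
name=Example CKI kernel build
baseurl=https://s3.example.com/cki/123456/x86_64/
enabled=1
gpgcheck=0
"""

print(repo_baseurls(EXAMPLE_REPO))
```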

Access to the GitLab links is limited by GitLab authentication and requires credentials for RHEL artifacts. Both the S3 storage and the repo files are accessible without authentication: public artifacts are available to anyone, while RHEL artifacts require internal access from the Red Hat VPN.

UMB messages for built kernels

CKI provides UMB messages for each built kernel. No extra authentication is needed to access the provided kernel repositories. Note that merge request access (e.g. to check the changes or add a comment with results) does require authentication, and you may want to set up an account to provide results back on the merge request. If you’d like to start using the UMB messages or have any requests about them, please check out the UMB messenger or open an issue there.

Note that no UMB messages are sent for any embargoed content, as doing so would make the sensitive information available company-wide. If you are assigned to test any sensitive content, you need to grab the kernel repo files manually from the merge request pipelines (publish jobs) or from Bugzilla/JIRA.

Testing RHEL content outside of the firewall

There are cases where RHEL kernel binaries need to be provided to partners for testing. As private kernel data is only available to Red Hat employees, manual steps similar to the original flow using Brew are needed here.

Follow the step-by-step guide in the FAQ to do this.

Targeting specific hardware

CKI automatically picks any supported machine fitting the test requirements. This setup is good for default sanity testing, but doesn’t work if you need to run generic tests on specific hardware. One such example is running generic networking tests on machines with specific drivers that are being modified. If you feel a commonly used mapping is missing and would be useful to include please reach out to the CKI team or submit a merge request to kpet-db. You can find more details about the steps involved in adding the mappings in the kpet-db documentation.

Debugging and fixing failures - more details

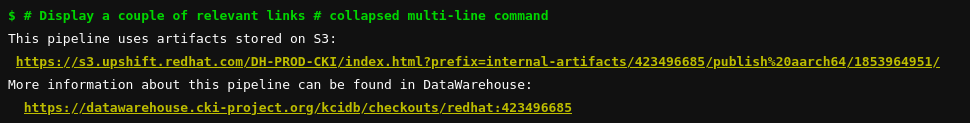

To check failure details, click on the pipeline jobs marked with ! or a red X; those are the ones that failed. You’ll see the job output, with some notes about what’s being done. Some links (like the aforementioned kernel repositories) may also be provided. Extra logs (e.g. full build output) will usually be available in DataWarehouse. Check those out to identify the source of the problem if the job output itself is not sufficient. A direct link to the DataWarehouse entry for your pipeline is provided towards the end of the GitLab job output:

Fix any issues that look to be introduced by your changes and push new versions (or even debugging commits). Testing runs again automatically on every push.

No steps are needed for infrastructure failures, as affected jobs are automatically retried. Infrastructure issues include, but are not limited to, pipeline jobs failing to start, jobs crashing due to a lack of resources on the node they ran on, or container registry issues. If in doubt, feel free to retry the job yourself. You can find the Retry button at the top right corner of the opened job.

Note that the Retry button doesn’t pull in any new changes (neither kernel nor CKI) and retries the original job as it was before. This provides a semi-reproducible environment and consistency within the pipeline. Essentially, only transient infrastructure issues can be handled by retrying. To pull in any new changes, you need to trigger a new pipeline by either pushing to your branch or clicking on the Run pipeline button in the Pipelines tab of your merge request.

Build failures

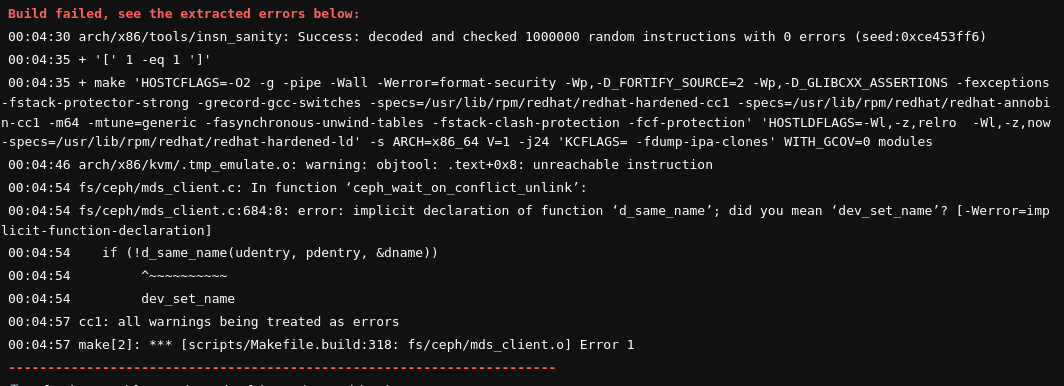

Build errors from the full log are extracted and printed out in the GitLab job:

The shortened version of this output is also available in the DataWarehouse view of the failed build.

If no errors could be extracted, the full build log from the artifacts can be used to identify the source of the failure. Please do reach out in these cases so the team can update the extraction script to handle the new cases.
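CKI’s own extraction script is not reproduced in this guide. As a rough illustration of the idea, the Python sketch below pulls typical gcc, make and linker error lines out of a build log, optionally with a few lines of context. The patterns, the function name and SAMPLE_LOG are all hypothetical; a real extraction script handles many more cases.

```python
import re

# Hypothetical patterns for common kernel build diagnostics.
ERROR_PATTERNS = [
    re.compile(r"^\S+:\d+:\d+: (?:fatal )?error: "),  # gcc: file:line:col: error:
    re.compile(r"^make(?:\[\d+\])?: \*\*\* "),         # make: *** [...] Error 1
    re.compile(r"undefined reference to "),             # linker errors
]


def extract_build_errors(log_text, context=0):
    """Return matching log lines plus up to `context` lines after each match."""
    lines = log_text.splitlines()
    keep = set()
    for i, line in enumerate(lines):
        if any(p.search(line) for p in ERROR_PATTERNS):
            keep.update(range(i, min(i + 1 + context, len(lines))))
    return [lines[i] for i in sorted(keep)]  # original order, no duplicates


# Made-up build log excerpt for illustration.
SAMPLE_LOG = """\
  CC      drivers/net/foo.o
drivers/net/foo.c:42:5: error: 'bar' undeclared (first use in this function)
   42 |     bar = 1;
make[4]: *** [scripts/Makefile.build:243: drivers/net/foo.o] Error 1
  LD      vmlinux
"""

print("\n".join(extract_build_errors(SAMPLE_LOG)))
```

When a failure produces none of the recognized lines, the result is empty, which is exactly the case where the full build log has to be read and the CKI team should be told about the new pattern.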

Test failures

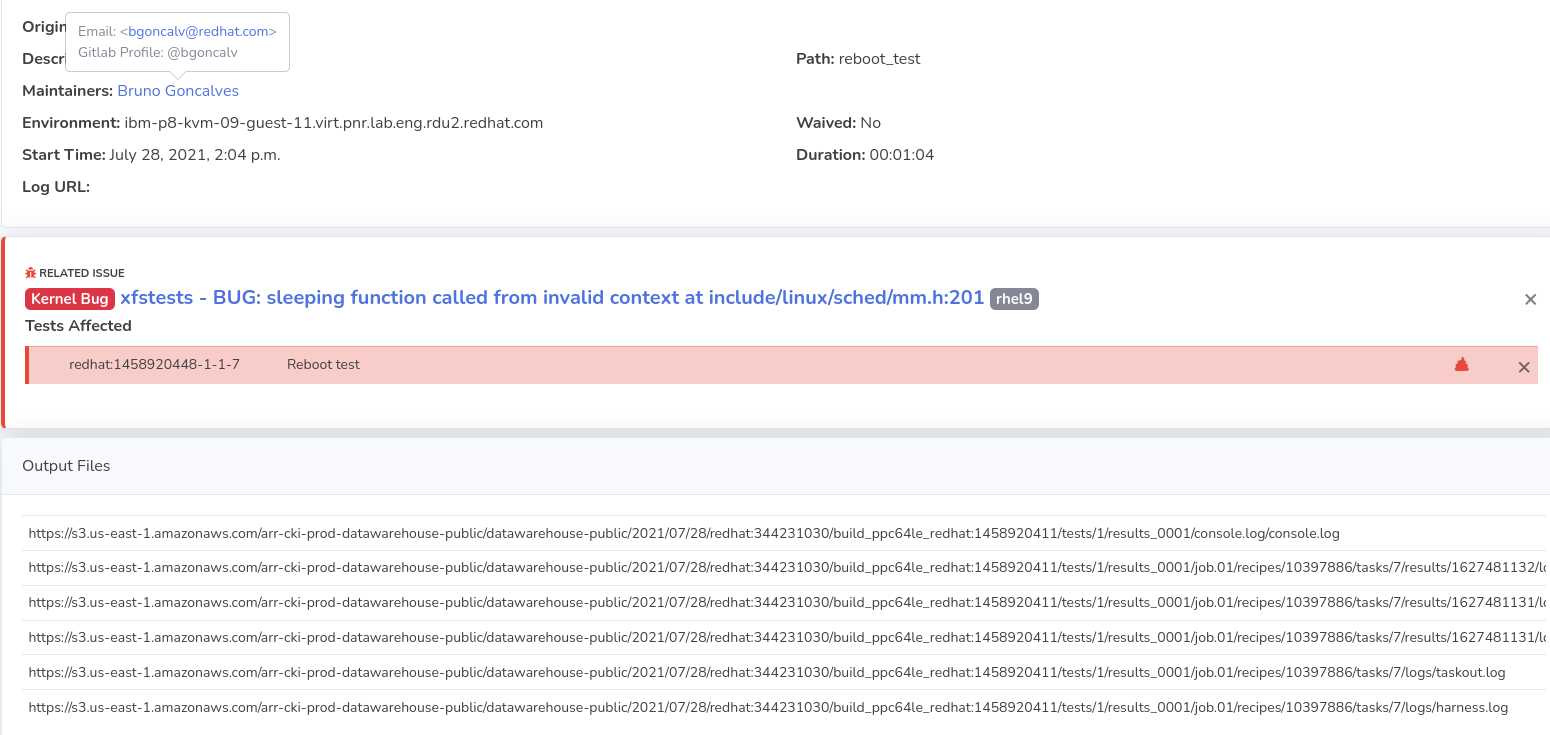

Test logs are linked from the test stage jobs, as well as in DataWarehouse. If you’re having issues finding out the failure reason, please reach out to the test maintainers. Test maintainer information is directly available in both of these locations. Name and email contacts are available for all maintainers. If the maintainers provided CKI with their gitlab.com usernames, these are logged as well, so you can tag them directly on your merge request.

Note: If you are working on a CVE, evaluate the situation carefully, and do not reach out to people outside of the assigned CVE group!

Example of test information in DataWarehouse, linked from the result checking job:

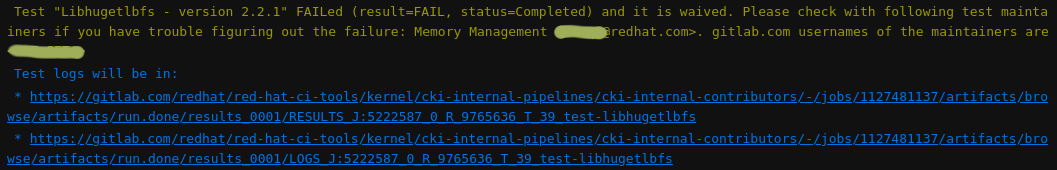

If you are working on a CVE, results are not pushed to DataWarehouse and log links and maintainer information are only available in the test job output:

In the example above you can see the test status, maintainer information and direct links to the logs of the failed test. In this case, the test is waived and thus its status does not cause the test job to fail. Tests which are not waived say so in the message, and the message is in red letters, as you can see in the example below. The rest of the message is the same.

Known test failures / issues

Tests may fail due to already existing kernel or test bugs or infrastructure issues. Unless they are crashing the machines, we’ll keep the tests enabled for two reasons:

- To not miss any new bugs they could detect

- To make QE’s job easier with fix verification: if a kernel bug is fixed the test will stop failing and it will be clearly visible

After testing finishes, the results and logs are submitted into DataWarehouse and checked for matches of any already known issues. If a known issue is detected, the test failure is automatically waived. Pipelines containing only known issues will be considered passing.

It’s the test maintainers’ responsibility to submit known issues into the database. If a new issue is added, untagged failures from the past two weeks are automatically rechecked and marked in the database if they match. The added issue will also be correctly recognized on any future CI runs.
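DataWarehouse’s real matching rules are not described in this guide. The Python sketch below assumes a simplified model, where a known issue is a test name plus a regular expression over the failure’s log excerpt, to illustrate the two behaviors described above: matching failures are waived (so a pipeline with only known issues passes), and adding a new issue rechecks untagged failures from the past two weeks. All class and function names are hypothetical.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class KnownIssue:
    issue_id: str
    test_name: str
    log_regex: str  # pattern expected in the failure's log excerpt

    def matches(self, failure):
        return (failure.test_name == self.test_name
                and re.search(self.log_regex, failure.log_excerpt) is not None)


@dataclass
class Failure:
    test_name: str
    log_excerpt: str
    finished: datetime
    known_issue: Optional[str] = None  # ID of the matched issue, if any


def waive_known(failures, issues):
    """Tag each untagged failure with the first known issue it matches."""
    for failure in failures:
        if failure.known_issue is None:
            for issue in issues:
                if issue.matches(failure):
                    failure.known_issue = issue.issue_id
                    break
    return failures


def pipeline_passes(failures):
    """A run passes if every observed failure is tied to a known issue."""
    return all(f.known_issue is not None for f in failures)


def recheck_new_issue(history, new_issue, now, window=timedelta(days=14)):
    """Tag untagged failures from the last `window` that match a new issue."""
    for failure in history:
        if (failure.known_issue is None
                and now - failure.finished <= window
                and new_issue.matches(failure)):
            failure.known_issue = new_issue.issue_id
    return history
```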

If you don’t have permissions to submit new issues and think you should have them, reach out to the CKI team.

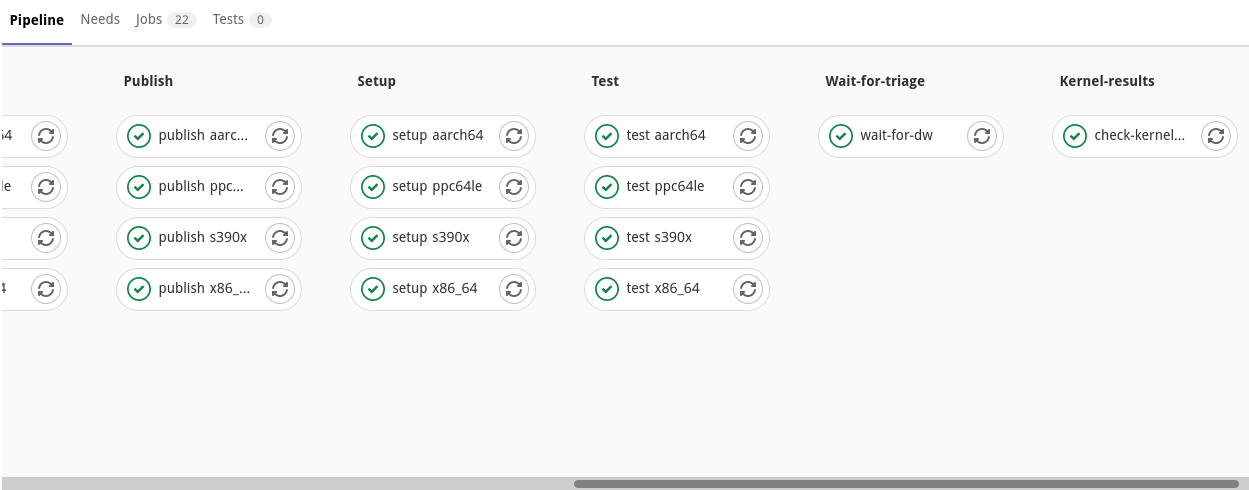

Once all issues related to a run are tagged, the check-kernel-results job in the pipeline should be rerun to unblock the MR. This job is available at the end of the pipeline:

The job queries DataWarehouse for updated results and prints a human friendly report.

Due to security concerns, only non-CVE testing has integrated known issue detection. If you are working on a CVE, you have to review the test results manually. The test result database is still available if you want to check for known issues yourself. There will be a clear message in the job logs stating so if that’s the case.

Blocking on failing CI pipelines

No changes introducing test failures should be integrated into the kernel. All observed failures need to be related to known issues. If a failure is not a known issue, it needs to be fixed before the merge request can be included. If the new failure is unrelated to the merge request, it should be submitted as a new known issue in DataWarehouse.

CI pipeline needs to be changed

- Your changes are adding new build dependencies. New packages can’t be installed ad hoc; they need to be added to the builder container. If you can, please submit a merge request with the new dependency to the container image repository.

- Your changes require pipeline configuration changes. This is the case for the make dist-srpm example from above. Check out the configuration option documentation and adjust the .gitlab-ci.yml file in the top directory of the kernel repository.

- Your changes are incompatible with the current process and the pipeline needs to be adjusted to handle them, a new feature is requested, or a bug is detected. This should rarely happen, but in case it does, please contact the CKI team to work out what’s needed.

If you need any help or assistance with any of the steps, feel free to contact the CKI team!

Customized CI runs

CI runs can be customized by modifying the configuration in the .gitlab-ci.yml file in the top directory of the kernel repository. The configuration option documentation contains the list of all supported variables with information on how to use them.

This can be useful for faster testing of Draft: merge requests. Unless these changes are required due to kernel or environment changes, they should be reverted before the merge request is marked as ready.

Most common overrides include (but are not limited to):

- architectures: Limit which architectures the kernel is built and tested for.

- skip_test: Skip testing, only build the kernel.

- test_set: Limit which tests should be executed.

The variables mentioned above imitate Brew builds and testing.
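To show the shape of such an override, the Python sketch below renders a variables mapping as YAML text. The variable names come from the list above; the values (e.g. "x86_64" for architectures, "true" for skip_test) are assumptions, so check the configuration option documentation for the accepted formats. Where exactly the mapping belongs in your repository’s .gitlab-ci.yml (top level or inside a job template) depends on that file, so look at it before editing.

```python
def variables_override(**overrides):
    """Render a GitLab CI `variables:` mapping as YAML text.

    Values are emitted as single-quoted YAML strings, since CI variables are
    strings; an embedded single quote is escaped by doubling it, per YAML.
    """
    lines = ["variables:"]
    for key, value in overrides.items():
        quoted = str(value).replace("'", "''")
        lines.append(f"  {key}: '{quoted}'")
    return "\n".join(lines) + "\n"


# Hypothetical one-off override for a Draft: MR: build x86_64 only, no tests.
print(variables_override(architectures="x86_64", skip_test="true"), end="")
```

Remember to revert such a one-off override before marking the merge request as ready.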

It is currently not possible to easily adjust merge request pipelines without modifying the CI file. GitLab has an issue open about this feature.

Future plans

Blocking on missing targeted testing

Every change needs to have a test associated with it (or at least one that exercises the area) to verify it doesn’t regress. A TargetedTestingMissing label on your merge request indicates that such tests are not yet onboarded into CKI for at least some of the changed files.

If targeted testing is deemed missing, a full list of uncovered files is available for review in any of the setup jobs of the pipeline.

Please work with your QE counterparts to create and onboard tests so your changes can be properly tested.

Targeted testing requirements for specific files can be explicitly waived in kpet-db. This could be done if the file changes don’t make sense to be tested (e.g. kernel documentation sources) or if there is no feasible way to have any sort of automated sanity test onboarded. Not having time to onboard a test is not an excuse to waive the targeted testing requirement! If you do wish to waive the requirement for specific files, please submit an issue or a merge request to kpet-db with a clear explanation of why the requirement is not reasonable for the files.
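The interplay between test mappings and waivers can be sketched in Python. In this minimal sketch, glob patterns stand in for the source-file mappings and waivers kept in kpet-db; the real kpet-db format is different, and the function names, patterns and file paths are hypothetical.

```python
from fnmatch import fnmatchcase


def uncovered_files(changed_files, test_patterns, waived_patterns=()):
    """Return changed files that no onboarded test covers and no waiver exempts.

    Note that fnmatch's * also matches '/', so "net/*" covers all of net/.
    """
    def hit(path, patterns):
        return any(fnmatchcase(path, p) for p in patterns)

    return [path for path in changed_files
            if not hit(path, waived_patterns) and not hit(path, test_patterns)]


def targeted_testing_missing(changed_files, test_patterns, waived_patterns=()):
    """Mirror the TargetedTestingMissing label: set if anything is uncovered."""
    return bool(uncovered_files(changed_files, test_patterns, waived_patterns))


# Hypothetical MR touching networking code and documentation.
changed = ["net/ipv4/tcp.c", "drivers/net/foo.c", "Documentation/networking/ip.rst"]
print(uncovered_files(changed, ["net/*"], waived_patterns=["Documentation/*"]))
```

Here the documentation file is exempted by a waiver, but drivers/net/foo.c stays uncovered until a test mapping for it is onboarded.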